The rubbish collector is a posh piece of equipment that may be tough to tune. Certainly, the G1 collector alone has over 20 tuning flags. Not surprisingly, many builders dread touching the GC. When you don’t give the GC just a bit little bit of care, your entire software may be working suboptimal. So, what if we let you know that tuning the GC doesn’t need to be onerous? Actually, simply by following a easy recipe, your GC and your entire software may already get a efficiency enhance.

This weblog submit reveals how we bought two manufacturing functions to carry out higher by following easy tuning steps. In what follows, we present you ways we gained a two instances higher throughput for a streaming software. We additionally present an instance of a misconfigured high-load, low-latency REST service with an abundantly giant heap. By taking some easy steps, we lowered the heap measurement greater than ten-fold with out compromising latency. Earlier than we accomplish that, we’ll first clarify the recipe we adopted that spiced up our functions’ efficiency.

A easy recipe for GC tuning

Let’s begin with the components of our recipe:

Moreover your software that wants spicing, you need some option to generate a production-like load on a check surroundings – until feeling courageous sufficient to make performance-impacting adjustments in your manufacturing surroundings.

To guage how good your app does, you want some metrics on its key efficiency indicators. Which metrics rely upon the particular objectives of your software. For instance, latency for a service and throughput for a streaming software. Moreover these metrics, you additionally need details about how a lot reminiscence your app consumes. We use Micrometer to seize our metrics, Prometheus to extract them, and Grafana to visualise them.

Together with your app metrics, your key efficiency indicators are coated, however ultimately, it’s the GC we like to boost. Until being curious about hardcore GC tuning, these are the three key efficiency indicators to find out how good of a job your GC is doing:

- Latency – how lengthy does a single rubbish gathering occasion pause your software.

- Throughput – how a lot time does your software spend on rubbish gathering, and the way a lot time can it spend on doing software work.

- Footprint – the CPU and reminiscence utilized by the GC to carry out its job

This final ingredient, the GC metrics, may be a bit tougher to search out. Micrometer exposes them. (See for instance this weblog submit for an outline of metrics.) Alternatively, you may get hold of them out of your software’s GC logs. (You’ll be able to seek advice from this text to discover ways to get hold of and analyze them.)

Now we have now all of the components we want, it’s time for the recipe:

Let’s get cooking. Hearth up your efficiency checks and preserve them working for a interval to heat up your software. At this level it’s good to put in writing down issues like response instances, most requests per second. This manner, you possibly can evaluate totally different runs with totally different settings later.

Subsequent, you establish your app’s dwell knowledge measurement (LDS). The LDS is the dimensions of all of the objects remaining after the GC collects all unreferenced objects. In different phrases, the LDS is the reminiscence of the objects your app nonetheless makes use of. With out going into an excessive amount of element, you will need to:

- Set off a full rubbish accumulate, which forces the GC to gather all unused objects on the heap. You’ll be able to set off one from a profiler corresponding to VisualVM or JDK Mission Management.

- Learn the used heap measurement after the complete accumulate. Below regular circumstances it is best to be capable of simply acknowledge the complete accumulate by the massive drop in reminiscence. That is the dwell knowledge measurement.

The final step is to recalculate your software’s heap. Most often, your LDS ought to occupy round 30% of the heap (Java Efficiency by Scott Oaks). It’s good follow to set your minimal heap (Xms) equal to your most heap (Xmx). This prevents the GC from doing costly full collects on each resize of the heap. So, in a formulation: Xmx = Xms = max(LDS) / 0.3

Spicing up a streaming software

Think about you could have an software that processes messages which can be printed on a queue. The applying runs within the Google cloud and makes use of horizontal pod autoscaling to robotically scale the variety of software nodes to match the queue’s workload. Every little thing appears to run positive for months already, however does it?

The Google cloud makes use of a pay-per-use mannequin, so throwing in additional software nodes to spice up your software’s efficiency comes at a value. So, we determined to check out our recipe on this software to see if there’s something to achieve right here. There actually was, so learn on.

Earlier than

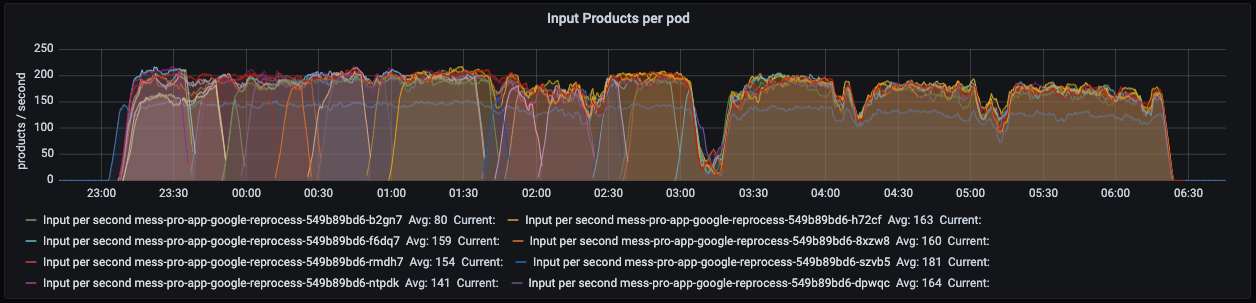

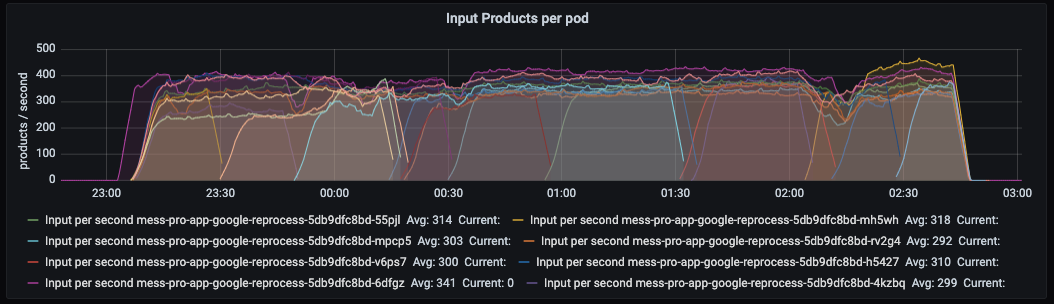

To ascertain a baseline, we ran a efficiency check to get insights into the applying’s key efficiency metrics. We additionally downloaded the applying’s GC logs to study extra about how the GC behaves. The under Grafana dashboard reveals what number of components (merchandise) every software node processes per second: max 200 on this case.

These are the volumes we’re used to, so all good. Nonetheless, whereas inspecting the GC logs, we discovered one thing that shocked us.

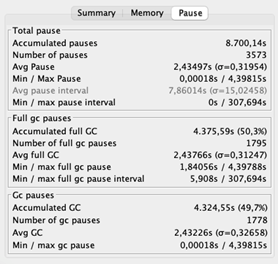

The typical pause time is 2,43 seconds. Recall that in pauses, the applying is unresponsive. Lengthy delays don’t must be a difficulty for a streaming software as a result of it doesn’t have to answer shoppers’ requests. The stunning half is its throughput of 69%, which implies that the applying spends 31% of its time wiping out reminiscence. That’s 31% not being spent on area logic. Ideally, the throughput must be at the very least 95%.

Figuring out the dwell knowledge measurement

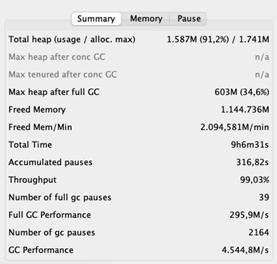

Allow us to see if we are able to make this higher. We decide the LDS by triggering a full rubbish accumulate whereas the applying is below load. Our software was performing so dangerous that it already carried out full collects – this usually signifies that the GC is in hassle. On the intense facet, we do not have to set off a full accumulate manually to determine the LDS.

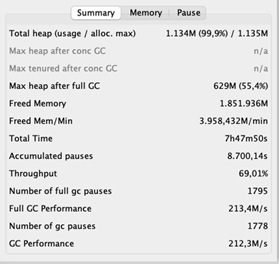

We distilled that the max heap measurement after a full GC is roughly 630MB. Making use of our rule of thumb yields a heap of 630 / 0.3 = 2100MB. That’s virtually twice the dimensions of our present heap of 1135MB!

After

Inquisitive about what this might do to our software, we elevated the heap to 2100MB and fired up our efficiency checks as soon as extra. The outcomes excited us.

After rising the heap, the common GC pauses decreased lots. Additionally, the GC’s throughput improved dramatically – 99% of the time the applying is doing what it’s meant to do. And the throughput of the applying, you ask? Recall that earlier than, the applying processed 200 components per second at most. Now it peaks at 400 per second!

Spicing up a high-load, low-latency REST service

Quiz query. You might have a low-latency, high-load service working on 42 digital machines, every having 2 CPU cores. Sometime, you migrate your software nodes to 5 beasts of bodily servers, every having 32 CPU cores. Given that every digital machine had a heap of 2GB, what measurement ought to it’s for every bodily server?

So, you will need to divide 42 * 2 = 84GB of complete reminiscence over 5 machines. That boils right down to 84 / 5 = 16.8GB per machine. To take no possibilities, you spherical this quantity as much as 25GB. Sounds believable, proper? Effectively, the right reply seems to be lower than 2GB, as a result of that’s the quantity we bought by calculating the heap measurement primarily based on the LDS. Can’t imagine it? No worries, we couldn’t imagine it both. Due to this fact, we determined to run an experiment.

Experiment setup

We’ve 5 software nodes, so we are able to run our experiment with 5 differently-sized heaps. We give node one 2GB, node two 4GB, node three 8GB, node 4 12GB, and node 5 25GB. (Sure, we aren’t courageous sufficient to run our software with a heap below 2GB.)

As a subsequent step, we fireplace up our efficiency checks producing a secure, production-like load of a baffling 56K requests per second. All through the entire run of this experiment, we measure the variety of requests every node receives to make sure that the load is equally balanced. What’s extra, we measure this service’s key efficiency indicator – latency.

As a result of we bought weary of downloading the GC logs after every check, we invested in Grafana dashboards to point out us the GC’s pause instances, throughput, and heap measurement after a rubbish accumulate. This manner we are able to simply examine the GC’s well being.

Outcomes

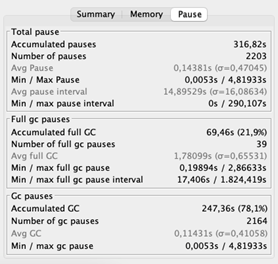

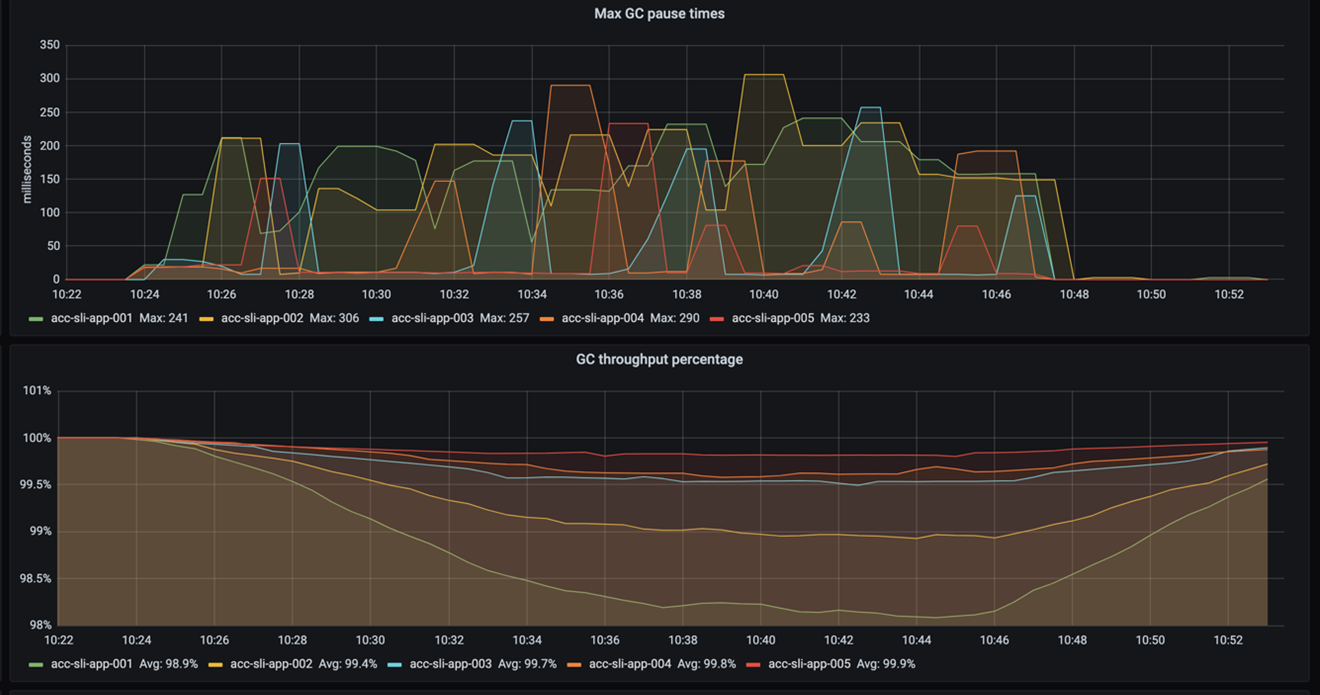

This weblog is about GC tuning, so let’s begin with that. The next determine reveals the GC’s pause instances and throughput. Recall that pause instances point out how lengthy the GC freezes the applying whereas sweeping out reminiscence. Throughput then specifies the proportion of time the applying will not be paused by the GC.

As you possibly can see, the pause frequency and pause instances don’t differ a lot. The throughput reveals it finest: the smaller the heap, the extra the GC pauses. It additionally reveals that even with a 2GB heap the throughput remains to be OK – it doesn’t drop below 98%. (Recall {that a} throughput greater than 95% is taken into account good.)

So, rising a 2GB heap by 23GB will increase the throughput by virtually 2%. That makes us marvel, how important is that for the general software’s efficiency? For the reply, we have to take a look at the applying’s latency.

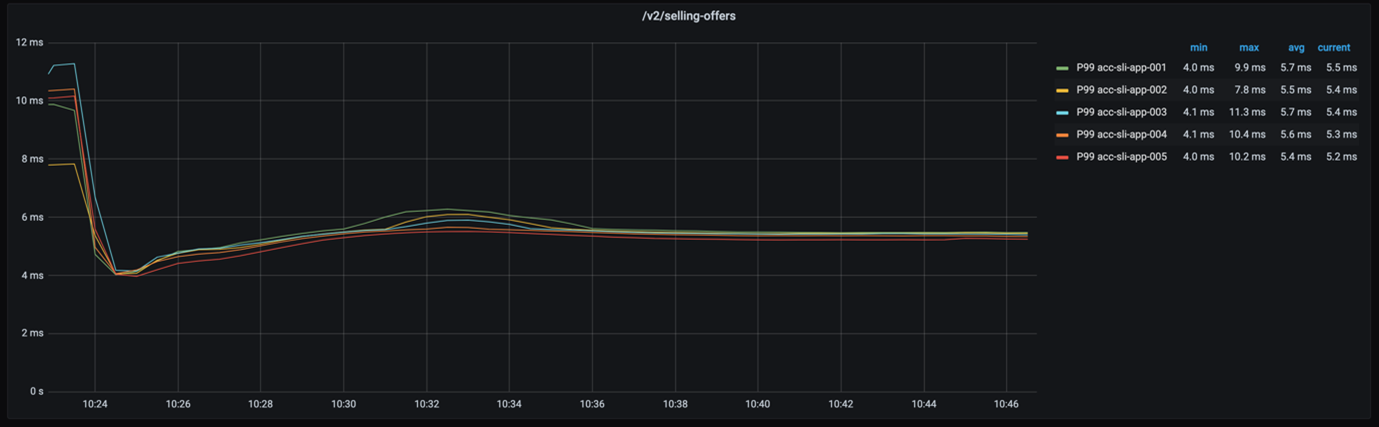

If we take a look at the 99-percentile latency of every node – as proven within the under graph – we see that the response instances are actually shut.

Even when we think about the 999-percentile, the response instances of every node are nonetheless not very far aside, as the next graph reveals.

How does the drop of just about 2% in GC throughput have an effect on our software’s total efficiency? Not a lot. And that’s nice as a result of it means two issues. First, the straightforward recipe for GC tuning labored once more. Second, we simply saved a whopping 115GB of reminiscence!

Conclusion

We defined a easy recipe of GC tuning that served two functions. By rising the heap, we gained two instances higher throughput for a streaming software. We lowered the reminiscence footprint of a REST service greater than ten-fold with out compromising its latency. All of that we completed by following these steps:

• Run the applying below load.

• Decide the dwell knowledge measurement (the dimensions of the objects your software nonetheless makes use of).

• Dimension the heap such that the LDS takes 30% of the full heap measurement.

Hopefully, we satisfied you that GC tuning does not must be daunting. So, deliver your personal components and begin cooking. We hope the end result might be as spicy as ours.

Credit

Many because of Alexander Bolhuis, Ramin Gomari, Tomas Sirio and Deny Rubinskyi for serving to us run the experiments. We couldn’t have written this weblog submit with out you guys.

![The Most Visited Websites in the World [Infographic]](https://newselfnewlife.com/wp-content/uploads/2025/05/Z3M6Ly9kaXZlc2l0ZS1zdG9yYWdlL2RpdmVpbWFnZS9tb3N0X3Zpc2l0ZWRfd2Vic2l0ZXMyLnBuZw.webp-120x86.webp)