Researchers at the Technical University of Munich (TUM) have developed autonomous driving software that distributes risk on the road fairly. The algorithm in the software is considered to be the first to incorporate the 20 ethics recommendations of the EU Commission expert group, and it therefore makes significantly more differentiated decisions than previous algorithms.

The operation of automated vehicles is to be made significantly safer by assessing the varying degrees of risk to pedestrians and motorists. The code is available to the general public as open source software.

Technical realization is not the only obstacle to be overcome before autonomous vehicles can be allowed on the road on a large scale. Ethical questions play an important role in the development of the corresponding algorithms: software has to be able to handle unforeseeable situations and make the necessary decisions when an accident is imminent.

Researchers at TUM have now developed the first ethical algorithm that fairly distributes the levels of risk rather than operating on an either/or principle. Approximately 2,000 scenarios involving critical situations were tested, distributed across various types of roads and regions such as Europe, the U.S. and China. The research, published in the journal Nature Machine Intelligence, is the joint result of a partnership between the TUM Chair of Automotive Technology and the Chair of Business Ethics at the TUM Institute for Ethics in Artificial Intelligence (IEAI).

Maximilian Geisslinger, a scientist at the TUM Chair of Automotive Technology, explains the approach: “Until now, autonomous vehicles were always faced with an either/or choice when encountering an ethical decision. But road traffic can't necessarily be divided into clear-cut, black and white situations; rather, the countless shades of gray in between have to be considered as well. Our algorithm weighs various risks and makes an ethical choice from among thousands of possible behaviors, and does so in only a fraction of a second.”

More options in critical situations

The basic ethical parameters on which the software's risk evaluation is oriented were defined by an expert panel in a written recommendation on behalf of the EU Commission in 2020. The recommendation includes basic principles such as priority for the worst-off and the fair distribution of risk among all road users. To translate these rules into mathematical calculations, the research team classified vehicles and persons moving in road traffic based on the risk they present to others and on their respective willingness to take risks.

A truck, for example, can cause serious damage to other traffic participants, while in many scenarios the truck itself will only experience minor damage. The opposite is the case for a bicycle. In the next step, the algorithm was told not to exceed a maximum acceptable risk in the respective road situations. In addition, the research team added variables to the calculation that account for responsibility on the part of the traffic participants, for example the responsibility to obey traffic regulations.
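The weighing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: risk is assumed to be collision probability times expected harm, responsibility is assumed to act as a simple discount, and the names `RoadUser`, `trajectory_cost`, the weights and the `MAX_ACCEPTABLE_RISK` value are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold; the article only says that a maximum acceptable risk exists.
MAX_ACCEPTABLE_RISK = 0.05


@dataclass
class RoadUser:
    name: str
    collision_probability: float  # chance of a collision on this trajectory (0..1)
    harm: float                   # expected harm if the collision happens (0..1)
    responsibility: float = 0.0   # 0 = obeys the rules, 1 = fully responsible for the danger

    @property
    def risk(self) -> float:
        # Assumed definition: probability x harm, discounted by the user's own responsibility.
        return self.collision_probability * self.harm * (1.0 - self.responsibility)


def trajectory_cost(users, w_total=1.0, w_worst=1.0, w_equal=1.0):
    """Return a cost for one trajectory, or None if any user's risk is unacceptable."""
    risks = [u.risk for u in users]
    if max(risks) > MAX_ACCEPTABLE_RISK:
        return None                       # trajectory rejected outright
    total = sum(risks)                    # overall risk for everyone involved
    worst = max(risks)                    # priority for the worst-off participant
    spread = max(risks) - min(risks)      # penalty for an unequal distribution of risk
    return w_total * total + w_worst * worst + w_equal * spread


# A cyclist is vulnerable (high harm); a truck is hardly harmed itself (low harm).
close_pass = [
    RoadUser("bicycle", collision_probability=0.10, harm=0.9),
    RoadUser("truck", collision_probability=0.02, harm=0.1),
    RoadUser("ego car", collision_probability=0.02, harm=0.5),
]
wide_pass = [
    RoadUser("bicycle", collision_probability=0.02, harm=0.9),
    RoadUser("truck", collision_probability=0.05, harm=0.1),
    RoadUser("ego car", collision_probability=0.05, harm=0.5),
]

print(trajectory_cost(close_pass))  # rejected: the risk to the cyclist is too high
print(trajectory_cost(wide_pass))   # accepted: a finite cost is returned
```

In this toy setup the close pass is rejected because the cyclist alone would carry a risk above the threshold, while the wide pass spreads a smaller risk across all three participants.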

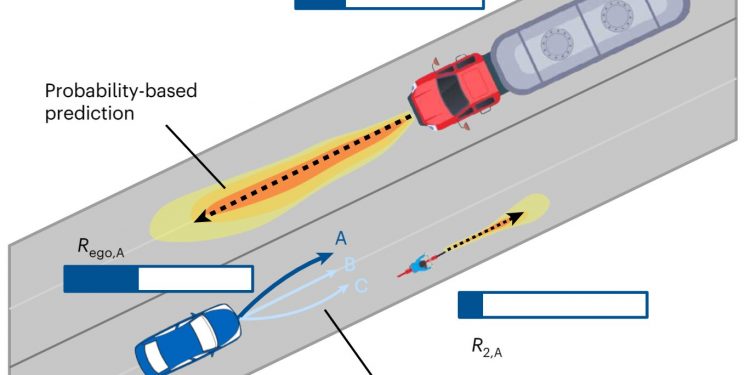

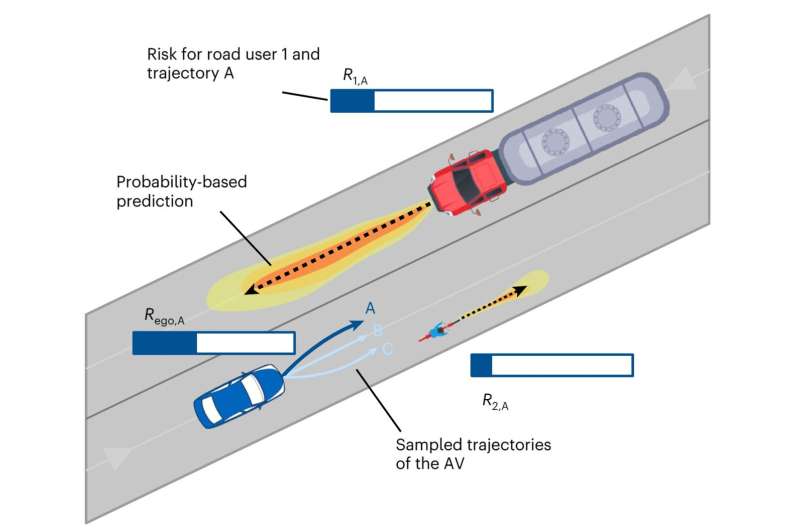

Previous approaches treated critical situations on the road with only a small number of possible maneuvers; in unclear cases the vehicle simply stopped. The risk assessment now integrated in the researchers' code results in more degrees of freedom with less risk for all. An example illustrates the approach: an autonomous vehicle wants to overtake a bicycle while a truck is approaching in the oncoming lane. All the available data on the surroundings and the individual participants are now used.

Can the bicycle be overtaken without driving in the oncoming traffic lane while maintaining a safe distance from the bicycle? What is the risk posed to each vehicle, and what risk do these vehicles pose to the autonomous vehicle itself? In unclear cases the autonomous vehicle with the new software always waits until the risk to all participants is acceptable. Aggressive maneuvers are avoided, while at the same time the autonomous vehicle doesn't simply freeze up and abruptly slam on the brakes. A simple yes or no is replaced by an evaluation that covers a large number of options.
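The overtaking example can be read as a selection among several candidate maneuvers, with waiting behind the bicycle as one of them. The sketch below is a hypothetical illustration, not the published planner: the maneuver names, the risk numbers, the `progress` values and the `MAX_ACCEPTABLE_RISK` threshold are made up for the example, and ranking acceptable options by progress is an assumption the article does not confirm.

```python
MAX_ACCEPTABLE_RISK = 0.05  # hypothetical threshold

# Each candidate lists an assumed risk per participant and the progress it makes (0..1).
candidates = {
    "overtake now, cross into oncoming lane": {
        "risks": {"bicycle": 0.01, "truck": 0.03, "ego": 0.08}, "progress": 1.0},
    "overtake now, stay in own lane": {
        "risks": {"bicycle": 0.09, "truck": 0.00, "ego": 0.01}, "progress": 1.0},
    "follow bicycle, overtake after truck has passed": {
        "risks": {"bicycle": 0.01, "truck": 0.00, "ego": 0.01}, "progress": 0.6},
    "emergency stop": {
        "risks": {"bicycle": 0.00, "truck": 0.00, "ego": 0.04}, "progress": 0.0},
}


def choose_maneuver(candidates, max_risk=MAX_ACCEPTABLE_RISK):
    """Pick the acceptable maneuver with the most progress; ties go to lower total risk."""
    acceptable = {
        name: c for name, c in candidates.items()
        if max(c["risks"].values()) <= max_risk
    }
    if not acceptable:
        # Nothing is acceptable: fall back to the smallest worst-case risk.
        return min(candidates, key=lambda n: max(candidates[n]["risks"].values()))
    return max(
        acceptable,
        key=lambda n: (acceptable[n]["progress"], -sum(acceptable[n]["risks"].values())),
    )


print(choose_maneuver(candidates))
# prints "follow bicycle, overtake after truck has passed"
```

Both immediate overtakes exceed the threshold for one participant, so the choice falls on waiting behind the bicycle rather than on an abrupt stop, which mirrors the behavior described in the example.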

‘Sole consideration of traditional ethical theories resulted in a dead end’

“Until now, traditional ethical theories were often used to derive morally permissible decisions for autonomous vehicles. This ultimately led to a dead end, since in many traffic situations there was no alternative but to violate one ethical principle,” says Franziska Poszler, a scientist at the TUM Chair of Business Ethics. “In contrast, our framework puts the ethics of risk at the center. This allows us to take probabilities into account and make more differentiated assessments.”

The researchers emphasize that even algorithms based on risk ethics cannot guarantee accident-free road traffic, although they can make decisions based on the underlying ethical principles in every possible traffic situation. In the future it will also be necessary to consider further differentiations, such as cultural differences in ethical decision-making.

So far the algorithm developed at TUM has been validated in simulations. In the future the software will be tested on the road using the research vehicle EDGAR. The code embodying the findings of the research is available as open source software. TUM is thus contributing to the development of viable and safe autonomous vehicles.

More information:

Maximilian Geisslinger et al, An ethical trajectory planning algorithm for autonomous vehicles, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-022-00607-z

Project “ANDRE: AutoNomous DRiving Ethics”: www.ieai.sot.tum.de/analysis/a … mous-driving-ethics/

Code: github.com/TUMFTM/EthicalTrajectoryPlanning

Technical University of Munich

Citation: Autonomous driving: New algorithm distributes risk fairly (2023, February 3), retrieved 3 February 2023 from https://techxplore.com/news/2023-02-autonomous-algorithm.html
