“Too many requests! You have made too many requests!! No more requests!!!” How many times do you have to hear that before you get really angry? Amazon Chime API’s request throttling tested our patience like this. But all we ever wanted was to make a simple text chat app work! In this article, you’ll find out why Chime was so unkind to us, what we did to turn things around, and how you too can follow the path we forged.

Request throttling is not always at the forefront of discussions about Amazon Web Services and its tools, as it is rarely a major cause for concern when developing a cloud-based system. Usually, both business and development focus more on the amount of memory and storage they can use.

However, in a recent project of mine, request throttling on AWS became one of our most severe bottlenecks, turning the seemingly simple task of adding a text chat feature into quite a complex solution full of creative workarounds.

How did my team and I find a way around request throttling to save a high-traffic text chat application?

You’ll find the answer in this practical case study.

Background – what kind of text chat did the client need?

To make the matter clear, I need to tell you a thing or two about the client I worked for.

The client

The COVID pandemic caused all kinds of trouble and thwarted a lot of plans of both individuals and companies. Lots of people missed out on important gatherings, holiday trips, and family time, not to mention long-awaited concerts, workshops, art shows, and live theatre events.

One of my clients came up with a solution: an online streaming platform that enables artists to organize and conduct their events remotely for the enjoyment of their paying fans. Essentially, it made it possible to bring a big part of the live experience to the web. It also opened up new opportunities in a post-COVID world, making art more accessible to many people, wherever they might be.

The client noticed that performing without an audience to look at and interact with could get kind of awkward for artists. To provide a way for performers and fans to interact, we decided to equip the platform with a text chat.

The theory – what’s throttling all about?

Before we get to the app, let’s review the theory behind request throttling limits.

As you probably know, throttling is the process of limiting the number of requests you (or your authorized developer) can submit to a given operation in a given amount of time.

A request can be, for example, submitting a listing feed or asking for an order report.

The term “API Request Throttling” used throughout this article refers to a quota put by an API owner on the number of requests a particular user can make in a certain period (throttling limits).

That way, you keep the amount of workload put on your services in check, ensuring that more users can enjoy the system at the same time. After all, it is important to share, isn’t it?

What about request throttling on the AWS cloud?

In my opinion, the documentation of EC2, one of the most popular AWS services, has quite a satisfying answer:

Amazon EC2 throttles EC2 API requests per account on a per-Region basis. The goal here is to improve the performance of the service and ensure fair usage for all Amazon EC2 customers. Throttling makes sure that calls to the Amazon EC2 API don’t exceed the maximum allowed API request limits.

Every AWS service has its own rules defining the number of requests one can make.

Challenge – not your average text chat

When you read the title, you might have thought to yourself: “A text chat? What’s so difficult about that?”

Well, as any senior developer would tell you, just about anything can be difficult, depending on the outside factors.

And it just so happens that this outside factor was special.

Not your average, everyday text chat

For the longest time, the AWS-based infrastructure of the platform had no issues with throttling. They started showing up when we implemented the text chat on top of the Amazon Chime communications service.

In a typical chat app, you give users the ability to register and choose the chat channels they wish to join. Ours worked a bit differently.

In this one, each chat participant was to be identified by the very same ticket code they used to gain access to the online event stream.

Unfortunately, the ticket data downloaded from our client’s ticketing system didn’t allow us to tell if a particular ticket belonged to a returning user. Because of that, we couldn’t assign previous chat user accounts to the ticket. As a result, we had to create them from scratch for each event.

Slow down there, cowboy!

Right after downloading over a thousand tickets’ worth of data from our client’s system, we tried to create Chime resources for all of them.

Almost immediately, we were cut off by the throttling error message due to the high load, which far exceeded the allowed maximum of 10 requests per second. We ran into the same capacity problem when trying to delete resources once they were no longer needed.

All of our problems stemmed from the fact that we hadn’t checked up front how many requests we could make to the Amazon Chime API.

Slow and steady wins the race?

“What about using bulk methods?”, you might ask. Well, at the time of writing this article, there weren’t many of those available.

Out of the 8 batch operations listed in the Amazon Chime API reference, we ended up using only one: the ability to create multiple channel memberships (with a limit of 100 memberships created at a time).

Even list operations are limited to fetching 50 items at a time, which made it difficult for us to stay within the limits given the number of users the chat solution would handle.
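To stay under the cap of 100 memberships per batch call, the list of members has to be cut into batches first. Here is a minimal sketch of that step. The `chunk` helper, the constant name, and the `user-N` IDs are made up for illustration, and the actual Chime call is deliberately left out.

```typescript
// Hypothetical helper: split a list into batches no larger than `size`.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Limit for batch channel memberships quoted in the article.
const MAX_MEMBERSHIPS_PER_BATCH = 100;

// Illustrative member IDs only; real IDs would come from the created chat users.
const memberIds = Array.from({ length: 1050 }, (_, i) => `user-${i}`);
const membershipBatches = chunk(memberIds, MAX_MEMBERSHIPS_PER_BATCH);

// 1050 members end up in 11 batches: ten full ones and a final batch of 50.
console.log(membershipBatches.map((batch) => batch.length));
```

Each batch still counts as a request of its own, so the batches have to go through the same rate limit as every other call.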

At this point, we decided to try to create every user individually.

The solution to request throttling for Amazon Chime

Obviously, we had to adjust our expectations regarding the speed at which this process would operate.

At 10 requests per second, we could create almost that many users every second (minus brief breaks for creating channels, moderators, batch memberships, and so on). Luckily, our client was fine with waiting a bit longer.

Every event was set up in advance, giving our solution enough time to create everything before the event even started.

We also had to make sure that only one user would be created for each ticket, since Chime restricts the number of those as well.

Our solution was to set up a separate EC2 instance, which created Chime resources asynchronously to the process of setting up an event and downloading tickets, limiting the client’s call count.

AWS API throttling solution – implementation

First of all, the setup includes a CRON job scheduled to run every minute.

This job has built-in protection against running twice if the previous run didn’t finish in time. Another layer of protection against running a job twice is having it run on a single EC2 instance, without upward scalability.
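A guard like this can be as simple as an in-process lock. The sketch below is an illustration under assumptions, not the project’s actual code: `RunLock` and `runExclusive` are hypothetical names, and the approach only holds up because the job lives in a single process on a single instance.

```typescript
// Hypothetical in-process lock: at most one run may hold it at a time.
class RunLock {
  private running = false;

  // Returns true and takes the lock if it is free, false otherwise.
  tryAcquire(): boolean {
    if (this.running) return false;
    this.running = true;
    return true;
  }

  release(): void {
    this.running = false;
  }
}

// Runs `job` unless a previous run still holds the lock.
// Resolves to true if the job ran, false if this tick was skipped.
async function runExclusive(
  lock: RunLock,
  job: () => Promise<void>,
): Promise<boolean> {
  if (!lock.tryAcquire()) {
    return false; // previous run still in progress: skip this tick
  }
  try {
    await job();
    return true;
  } finally {
    lock.release(); // always free the lock, even if the job throws
  }
}
```

A scheduler firing every minute would call `runExclusive(lock, job)` on each tick with one shared `RunLock`. A tick that arrives while the previous run is still busy simply returns `false` and does nothing.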

Running the createChimeUsers method retrieves a set number of tickets from a private database (150-200 worked best for us).

And for each ticket retrieved from our database, we create a chat user.

We used a package called throttled-queue as our throttling handler.

The throttledQueue parameters are responsible for the following configuration of Chime API calls:

- the maximum number of requests we wish to perform per given amount of time,

- the time (in milliseconds) during which requests up to that maximum can be performed,

- a boolean flag determining whether requests should be spread out evenly in time (true) or should run as soon as possible (false).

With our configuration, adhering to Amazon Chime limitations, we make 10 calls per second, spread out evenly throughout that time.
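To show how these three parameters interact, here is a self-contained stand-in for a throttling queue. It is not the source or the API of throttled-queue, just a simplified sketch that schedules one batch of calls. `startDelayMs`, `runThrottled`, and the `createChatUser` callback are hypothetical names, and only `createChimeUsers` comes from the text above.

```typescript
// Delay (in ms) before the call at position `index` in the batch may start.
function startDelayMs(
  index: number,
  maxPerInterval: number,
  intervalMs: number,
  evenlySpaced: boolean,
): number {
  if (evenlySpaced) {
    // One call every intervalMs / maxPerInterval, e.g. every 100 ms for 10 per second.
    return index * (intervalMs / maxPerInterval);
  }
  // Bursts: up to maxPerInterval calls at once, then wait for the next interval.
  return Math.floor(index / maxPerInterval) * intervalMs;
}

const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Starts every task at its scheduled delay and collects the results in order.
function runThrottled<T>(
  tasks: ReadonlyArray<() => Promise<T>>,
  maxPerInterval: number,
  intervalMs: number,
  evenlySpaced: boolean,
): Promise<T[]> {
  return Promise.all(
    tasks.map((task, index) =>
      sleep(startDelayMs(index, maxPerInterval, intervalMs, evenlySpaced)).then(task),
    ),
  );
}

// Hypothetical wiring: `createChatUser` stands for whatever performs the
// actual Chime request for one ticket and resolves to the new user's ID.
function createChimeUsers(
  ticketCodes: readonly string[],
  createChatUser: (ticketCode: string) => Promise<string>,
): Promise<string[]> {
  const tasks = ticketCodes.map((code) => () => createChatUser(code));
  // 10 calls per 1000 ms, spread out evenly, matching the setup described above.
  return runThrottled(tasks, 10, 1000, true);
}
```

With evenly spaced calls, the tenth call of a batch starts 900 ms after the first one, so a batch of 150 tickets is spread over roughly 15 seconds. With the flag set to false, the first ten calls would all start immediately and the next ten a full second later.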


Project deliverables

So what did we achieve as a result of doing all that?

Technology

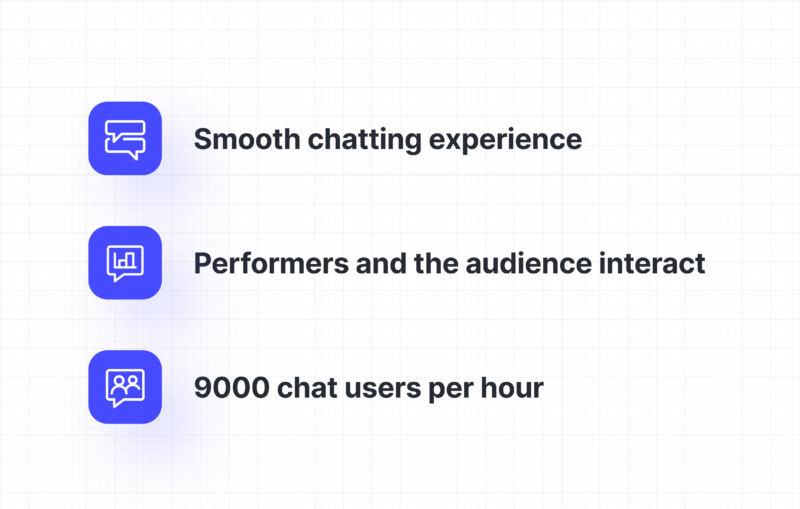

As far as tangible deliverables go, we achieved the following:

- Service responsibilities have been split. As a result, the separation of concerns for text chat and ticket retrieval has been achieved.

- The ticket retrieval system doesn’t concern itself with text chat anymore. Consequently, throttling or any other AWS-related issues don’t impact this function anymore.

- The new text chat service handles all of the requests to Amazon Chime. Moving this from a scalable service to a non-scalable one ensures that no duplicates of Chime resources will be created.

What’s even better, our success made a noticeable difference for the product itself!

Business

Now that the request throttling issue has been resolved:

- A smooth chatting experience for end users has been achieved. There have been no detected issues in terms of missing chat users or inaccessible chat.

- Performers can interact with the audience undisturbed by technical limitations.

- With the current configuration, we’re able to create 9000 (!) chat users per hour.
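The hourly figure lines up with the setup described earlier. The arithmetic below is a sketch that assumes the lower end of the 150-200 tickets per run and one Chime request per user, neither of which is stated explicitly for the final configuration.

```typescript
const TICKETS_PER_RUN = 150; // assumed: lower end of the 150-200 range
const RUNS_PER_HOUR = 60; // the CRON job fires every minute
const MAX_REQUESTS_PER_SECOND = 10; // Chime limit quoted in the article

// 150 users per run * 60 runs per hour = 9000 users per hour
const usersPerHour = TICKETS_PER_RUN * RUNS_PER_HOUR;

// 150 requests at 10 per second = 15 seconds of work per one-minute slot
const secondsPerRun = TICKETS_PER_RUN / MAX_REQUESTS_PER_SECOND;

console.log({ usersPerHour, secondsPerRun });
```

Under these assumptions, each run keeps the queue busy for about a quarter of its one-minute slot, which leaves room for the channel, moderator, and membership calls.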

As you can see, finding a custom way around Chime’s request throttling resulted in many tangible benefits for the client, without having to look for an entirely new text chat solution.

Text chat & request throttling issues on AWS – lessons learned

And that’s how my team and I managed to work around the issue of AWS API throttling in Amazon Chime, scoring a couple of wins for the client in the process.

The challenge taught us, or should I say reminded us of, a few lessons:

- Despite offering a lot of benefits for the efficiency and stability of your infrastructure, AWS is not just a gift that keeps on giving. It’s important to remember that all AWS users have the responsibility to follow all the rules so that resources are shared fairly.

- Before you choose a particular AWS service, you should pay attention to all the limits regarding efficiency, security, request handling, and others set by the provider.

- In the case of cloud-based architectures, increasing the efficiency (that is, the number of requests per second) may be either impossible or very costly. It’s important to be aware of what you can do with your cloud service at the design stage in order to avoid problems later on.

If you follow these guidelines, you shouldn’t come across any surprises during the implementation of a text chat or any other app or functionality.

Do you want to work on AWS cases like this with us?

The Software House offers a lot of such opportunities for ambitious developers who love challenging cloud projects.
