We asked 25 developers, five tech leads and someone “who does unspeakable things with technology” (more commonly known as our principal tech lead) which technologies (tools, libraries, languages or frameworks) they believe are hyped, happening or happened:

- hyped – which new technologies are you eager to learn more about this year?

- happening – are there any exciting technologies you used last year that we should learn this year?

- happened – did you walk away from any technologies last year?

This is what we got back:

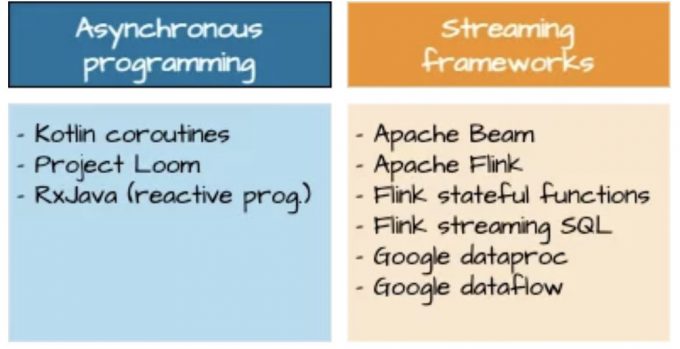

In this blog post, which is part of a series of six blog posts in total, we take a closer look at asynchronous programming and data streaming frameworks.

Go to The hyped, happening and happened tech @ bol.com for a complete overview of all the hyped, happening and happened tech at bol.com.

Remember those good (?) old times when we started our compilation job, went for a coffee, and continued with the next task only after the compiler had finished? Imagine what would happen if we worked the same way when fetching data from different sources. We live in a microservice world where we have to assemble web pages from pieces of information coming from a variety of sources. It’s 2021, people are impatient and we want our results delivered to our customers within hundreds of milliseconds. So asynchronous (and reactive) programming, which lets us run multiple tasks in parallel, is still hip and happening today.
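To make the idea of fetching from several sources at once a bit more tangible, here is a minimal sketch in plain Java using `CompletableFuture`. The class `PageAssembler` and the methods `fetchProduct` and `fetchReviews` are made up for illustration; they merely sleep to mimic a slow remote call and do not represent any actual bol.com service.

```java
import java.util.concurrent.CompletableFuture;

class PageAssembler {
    // Hypothetical stand-ins for two remote services; each sleeps to mimic network latency.
    static String fetchProduct() {
        pause(200);
        return "product";
    }

    static String fetchReviews() {
        pause(200);
        return "reviews";
    }

    static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // Starts both fetches without waiting, then combines the results once both are done.
    static String assemblePage() {
        CompletableFuture<String> product = CompletableFuture.supplyAsync(PageAssembler::fetchProduct);
        CompletableFuture<String> reviews = CompletableFuture.supplyAsync(PageAssembler::fetchReviews);
        return product.thenCombine(reviews, (p, r) -> p + " + " + r).join();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        String page = assemblePage();
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        System.out.println(page + " assembled in about " + elapsedMillis + " ms");
    }
}
```

Because the two calls overlap, the total waiting time should be closer to the slowest call than to the sum of both, although the exact timing depends on the machine.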

Before we can truly appreciate what Java’s Project Loom and Kotlin’s coroutines bring, we first need to understand two concepts: lightweight versus heavyweight threading and preemptive versus cooperative scheduling. So let us explain these concepts first.

Lightweight versus heavyweight threads

To understand what lightweight threads are, let us first understand their opposite: heavyweight threads. When creating a thread in Java, the JVM performs a native call to the operating system (OS) to create a kernel thread. Each kernel thread typically has its own program counter, registers and call stack, all of which we refer to as the thread’s context. Switching from kernel thread A to kernel thread B means saving the context of thread A and restoring that of thread B. This is usually a relatively expensive, or heavy, operation.

The main goal of lightweight threading is to reduce context switching. It’s hard to come up with a crisp definition of what a lightweight (or heavyweight) thread is. Informally, we say that the more context is involved when switching between threads, the heavier the thread is.

Preemptive versus cooperative scheduling

It’s possible to create more threads than CPU cores, meaning that not all threads can run simultaneously. Consequently, a decision has to be made about which thread runs on the CPU when. As you probably guessed, there are two ways of scheduling threads: preemptive and cooperative scheduling.

In preemptive scheduling it is the scheduler that decides which thread is allowed to run on the CPU. Threads that are currently running are forcibly suspended and threads that are waiting for the CPU are resumed. The thread itself has no say in when it will be suspended or resumed.

In cooperative scheduling, as opposed to preemptive scheduling, it is not the scheduler that forces threads to suspend; the threads themselves control when they are suspended. Once a thread runs, it continues to do so until it explicitly relinquishes control of the CPU.
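Cooperative scheduling can be sketched in a few lines of plain Java. The toy scheduler below is an illustration only, not how the JVM or Kotlin implements anything: each task is modelled as an `Iterator` whose `next()` call runs one step and then voluntarily hands control back. The names `CooperativeScheduler`, `task` and `runAll` are invented for this example.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

class CooperativeScheduler {
    // A task is an iterator: every next() call runs one step,
    // after which the task gives control back to the scheduler.
    static Iterator<String> task(String name, int steps) {
        return new Iterator<String>() {
            private int step = 0;

            @Override
            public boolean hasNext() {
                return step < steps;
            }

            @Override
            public String next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                step++;
                return name + step;
            }
        };
    }

    // Round-robin over all tasks on the calling thread; no task is ever interrupted mid-step.
    static List<String> runAll(List<Iterator<String>> tasks) {
        Deque<Iterator<String>> ready = new ArrayDeque<>(tasks);
        List<String> trace = new ArrayList<>();
        while (!ready.isEmpty()) {
            Iterator<String> current = ready.poll();
            if (current.hasNext()) {
                trace.add(current.next()); // run until the task yields
                ready.add(current);        // re-queue it behind the other tasks
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        System.out.println(runAll(List.of(task("A", 2), task("B", 3))));
        // prints [A1, B1, A2, B2, B3]
    }
}
```

Note that both tasks make progress on a single thread. A task that never returned from `next()` would starve the other one, which is exactly the risk that comes with cooperative scheduling.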

Kotlin – coroutines

Kotlin’s support for lightweight threads comes in the form of coroutines. Coroutines allow us to implement asynchronous applications in a fluent way. Before we continue, it’s fair to say that Kotlin coroutines offer more than lightweight concurrency, such as channels for inter-coroutine communication. The wealth of these additional constructs might already be a reason for you to use coroutines. However, a discussion of these concepts is beyond the scope of this blog post.

At first glance a coroutine might look very similar to a Java thread. One big difference with Java threads is, however, that coroutines come with programming constructs to explicitly relinquish control of the CPU. That is to say, coroutines are cooperatively scheduled, whereas traditional Java threads are preemptively scheduled.

Coroutines’ cooperative way of yielding control of the CPU enables Kotlin to use kernel threads more efficiently by running multiple coroutines on a single kernel thread. Note that this contrasts with traditional Java threads, which map one-to-one to kernel threads. This characteristic makes Kotlin coroutines more lightweight than Java threads.

Java – Project Loom

Project Loom aims to bring lightweight threads, called virtual threads, to the Java platform by decoupling Java threads from the heavyweight kernel threads. In that respect Project Loom and Kotlin coroutines pursue the same goal: they both aim to bring lightweight threading to the JVM. In contrast to Kotlin coroutines, which are cooperatively scheduled, Project Loom’s threads remain preemptively scheduled.

Project Loom is still in its experimental phase, meaning that lightweight threads in Java are not yet happening today. One might ask what Project Loom will entail for Kotlin coroutines. Will virtual threads make Kotlin coroutines obsolete? Could virtual threads serve as a basis upon which Kotlin coroutines are built? Or will we be left with two co-existing threading models, virtual threads and coroutines? It is exactly these questions that are also discussed by the Kotlin developer community (see for example the following discussion on the Kotlin forum: https://discuss.kotlinlang.org/t/project-loom-will-make-coroutines-obsolete/17833/5).

“[Project Loom] can enable much better performing concurrent code in applications which deal a lot with waiting for I/O (e.g. HTTP calls to other systems).”

Reactive programming – RxJava

Reactive programming offers yet another way to avoid the costs of switching between different threads. Instead of creating a thread for every task, in the reactive paradigm tasks are executed by a dedicated thread that is run and managed by the reactive framework. Typically, there is one dedicated thread per CPU core. So, as a programmer you don’t have to worry about switching between threads.

Not having to worry about threads and concurrency may sound simple. However, reactive programming is radically different from more traditional (imperative) ways of programming in which we define sequences of instructions to execute. Reactive programming is based on a more functional style. You define chains of operations that trigger in response to asynchronous data. For those unfamiliar with reactive programming, it helps to think of these chains of operations as Java 8 streams that allow us to filter, map and flatMap items of data that flow through.
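For readers who have not seen such a chain before, the following sketch uses the standard `java.util.stream` API rather than RxJava, so it only shows the shape of a filter, flatMap and map chain and not the asynchronous behaviour of a reactive framework. The class name `OperationChain` and the order data are invented for illustration.

```java
import java.util.List;
import java.util.stream.Collectors;

class OperationChain {
    // Each order is a list of product names; empty orders are skipped.
    static List<String> productsInUpperCase(List<List<String>> orders) {
        return orders.stream()
                .filter(order -> !order.isEmpty())   // drop empty orders
                .flatMap(List::stream)               // one item per product
                .map(String::toUpperCase)            // transform each item
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<List<String>> orders = List.of(
                List.of("book", "lamp"),
                List.<String>of(),
                List.of("phone"));
        System.out.println(productsInUpperCase(orders));
        // prints [BOOK, LAMP, PHONE]
    }
}
```

In a reactive framework the chain looks much the same, but the items arrive over time instead of being pulled from an in-memory collection.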

Reactive programming frameworks offer more than just operations to manipulate data streams, though. The reactive framework selects a free thread from the pool to execute your code as soon as incoming data is observed and releases the thread as soon as your code has finished, thereby relieving you of pain points such as threading, synchronization and non-blocking I/O.
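The idea of a small, framework-managed pool that runs your handler whenever data arrives can be approximated with a plain `ExecutorService`. This is a rough sketch under our own assumptions, not how RxJava schedules work internally; `PooledHandlers` and `handleAll` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

class PooledHandlers {
    // Runs the handler once per item on a pool with one thread per core
    // and returns the names of the threads that did the work.
    static Set<String> handleAll(List<String> items, Consumer<String> handler) {
        int cores = Runtime.getRuntime().availableProcessors();
        ExecutorService pool = Executors.newFixedThreadPool(cores);
        Set<String> threadsUsed = ConcurrentHashMap.newKeySet();
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (String item : items) {
                // The pool picks a free thread; the handler never creates one itself.
                pending.add(pool.submit(() -> {
                    threadsUsed.add(Thread.currentThread().getName());
                    handler.accept(item);
                }));
            }
            for (Future<?> future : pending) {
                future.get(); // wait for every handler to finish
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
        return threadsUsed;
    }

    public static void main(String[] args) {
        List<String> items = new ArrayList<>();
        for (int i = 0; i < 100; i++) {
            items.add("item-" + i);
        }
        Set<String> threads = handleAll(items, item -> { /* handle the item here */ });
        System.out.println("100 items handled by " + threads.size() + " pool thread(s)");
    }
}
```

However many items come in, the number of threads never exceeds the number of cores, which is the property the reactive model relies on.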

Not being bothered with concurrency and all the issues it brings sounds great. However, in our experience reactive programming does increase the level of complexity of your code. As a consequence, your code becomes harder to test and maintain. That is probably the reason why some people are walking away from reactive frameworks such as RxJava.

We have access to terabytes of data. Data about which pages our customers visit, which links they click on and which products they order. This information helps us to keep improving our service and to stay ahead of our competition. The sooner we have that information, the sooner we can act upon it.

Data streaming helps us perform complex operations on large amounts of (continuously) incoming data coming from different input sources. Streaming frameworks provide constructs for defining dataflows (or pipelines) for aggregating and manipulating the data in real time.

Does this all sound abstract to you? Then it might help to visualize a dataflow as something quite similar to a Java 8 stream, because just as with a Java 8 stream we map, filter and aggregate data. The difference is that dataflows process much larger quantities of data than Java 8 streams typically do. Where a Java 8 stream runs on a single JVM, a streaming pipeline is managed by a streaming engine that runs it on different nodes in a cluster. This is particularly useful when the data doesn’t fit on a single machine, something that is true for the amounts of data we process.
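As a single-JVM illustration of the map, filter and aggregate steps, the sketch below counts page visits with the standard Java stream API. The event format `customerId,page` and the class `ClickCounts` are assumptions made up for this example; a real pipeline would express the same steps in the API of a streaming framework and run them on a cluster.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class ClickCounts {
    // Assumed event shape: "customerId,page". Lines that do not match are filtered out.
    static Map<String, Long> visitsPerPage(List<String> events) {
        return events.stream()
                .map(line -> line.split(","))            // map: parse each raw event
                .filter(parts -> parts.length == 2)      // filter: keep well-formed events
                .collect(Collectors.groupingBy(          // aggregate: count visits per page
                        parts -> parts[1],
                        Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> events = List.of("c1,home", "c2,home", "c1,cart", "broken");
        Map<String, Long> counts = visitsPerPage(events);
        System.out.println("home=" + counts.get("home") + ", cart=" + counts.get("cart"));
        // prints home=2, cart=1
    }
}
```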

Apache Beam and Dataflow

There are many streaming frameworks to choose from, for example Apache Flink, Apache Spark, Apache Storm and Google Dataflow. Each streaming framework comes with its own API for defining streaming pipelines. As a consequence, it is not possible to run, for example, an Apache Flink pipeline on a Google Dataflow engine. To be able to do so you have to translate your Flink pipeline into something Dataflow understands. Only then can you run it on the Dataflow streaming engine.

Apache Beam overcomes this limitation by defining a unifying API. With this API you can define pipelines that run on multiple streaming platforms. In fact, Apache Beam doesn’t come with its own streaming engine. (It does offer one for running on small data sets on a local machine.) Instead, it uses the streaming engine of your choice. This can be, among others, Apache Flink or Google Dataflow.

At this point you might wonder why we put Dataflow and Apache Beam together. Dataflow is nothing more than a streaming engine to run streaming pipelines. Well, you typically define your streaming pipelines in Apache Beam. This is because Google Dataflow doesn’t come with its own API for defining streaming jobs, but uses Apache Beam’s model instead.

Even though a streaming engine manages the distribution of the data among different nodes for you, you are still tasked with setting up the cluster on which these nodes run yourself. With Dataflow, however, you don’t have to worry about things like configuring the number of workers a pipeline runs on or scaling up workers as the incoming data increases. Google Dataflow takes all that sorrow away from you.

Flink

Apache Flink, a reliable and scalable streaming framework that is capable of performing computations at in-memory speed, has been satisfying many teams’ data hunger for a while now. Its excellently documented core concepts and responsive community are among its strong points.

Another advantage is that Flink offers much more functionality than Apache Beam, which has to strike a good balance between portability across different streaming platforms and offered functionality. Flink goes beyond the streaming API you will find in Apache Beam. It offers, for example:

- stateful functions that can be used for building distributed stateful applications, and

- the Table and SQL API as an extra layer of abstraction on top of Flink’s data streaming API that allows you to query your data sources with an SQL-like syntax.

Why are we still using Apache Beam then? Well, to run an Apache Flink application, you have to maintain your own cluster. And that is a clear disadvantage compared to Google Dataflow / Apache Beam, with which you have a fully scalable data streaming application running in the cloud in no time. This is exactly the reason why my team chose Apache Beam over Apache Flink a while ago.

Google Dataproc

Google Dataproc is a fully managed cloud service for running:

- Apache Spark

- Apache Flink

- and more (data streaming) tools and frameworks.

That’s good news, because this unleashes the power of Apache Flink on the cloud. What would this do to Apache Beam’s popularity? Would we see Apache Flink’s popularity rise at the expense of Apache Beam’s? Well, we can only speculate.

Want to read more about hyped, happening and happened tech at bol.com? Read all about The hyped, happening and happened front end frameworks and web APIs @ bol.com or go back to The hyped, happening and happened tech @ bol.com for a complete overview of all the hyped, happening and happened tech at bol.com.