ETH computer scientists have developed a new AI solution that enables touchscreens to sense with eight times higher resolution than current devices. Thanks to AI, their solution can infer much more precisely where fingers touch the screen.

Quickly typing a message on a smartphone sometimes results in hitting the wrong letters on the small keyboard or on other input buttons in an app. The touch sensors that detect finger input on the touch screen have not changed much since they were first introduced in mobile phones in the mid-2000s.

In contrast, the screens of smartphones and tablets now offer unprecedented visual quality, which becomes even more evident with each new generation of devices: higher color fidelity, higher resolution, crisper contrast. A latest-generation iPhone, for example, has a display resolution of 2532×1170 pixels. But the touch sensor it integrates can only detect input with a resolution of around 32×15 pixels, almost 80 times lower than the display resolution along each axis. “And here we are, wondering why we make so many typing errors on the small keyboard? We think that we should be able to select objects with pixel accuracy through touch, but that's certainly not the case,” says Christian Holz, ETH computer science professor from the Sensing, Interaction & Perception Lab (SIPLAB), in an interview in the ETH Computer Science Department's “Spotlights” series.
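The “almost 80 times” figure refers to each axis separately, which a quick calculation with the two resolutions quoted above confirms. This is plain arithmetic on the article's numbers; the variable names are illustrative.

```python
# Resolutions quoted in the article, as (long axis, short axis).
display = (2532, 1170)
touch_sensor = (32, 15)

# How many display pixels share one touch-sensor cell along each axis.
ratio_long = display[0] / touch_sensor[0]
ratio_short = display[1] / touch_sensor[1]

print(f"{ratio_long:.1f} x {ratio_short:.1f}")  # 79.1 x 78.0
```

Per area the gap is far larger (roughly 6,000 display pixels per sensor cell), which is why the location of a touch must be interpolated rather than read off directly.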

Together with his doctoral student Paul Streli, Holz has now developed an artificial intelligence (AI) called CapContact that gives touch screens super-resolution, so that they can reliably detect when and where fingers actually touch the display surface, with much higher accuracy than current devices. This week they presented their new AI solution at ACM CHI 2021, the premier conference on Human Factors in Computing Systems.

Recognising where fingers touch the screen

The ETH researchers developed the AI for capacitive touch screens, the type of touch screen used in all our mobile phones, tablets, and laptops. The sensor detects the position of the fingers because the electrical field between the sensor lines changes when a finger comes close to the screen surface. Because capacitive sensing inherently captures this proximity, it cannot actually detect true contact. For interaction this is nevertheless sufficient, because the intensity of a measured touch decreases exponentially as the distance between finger and screen grows.
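To make the exponential fall-off concrete, the sketch below models measured intensity as `exp(-d / L)` relative to a finger resting on the glass. The decay length `L` of 1.5 mm is a hypothetical value chosen for illustration, not a measured property of any panel.

```python
import math

# Hypothetical decay length in millimetres (assumption, not from the article).
DECAY_LENGTH_MM = 1.5


def relative_intensity(distance_mm: float, decay_length_mm: float = DECAY_LENGTH_MM) -> float:
    """Intensity relative to a finger touching the glass (distance 0), exponential model."""
    return math.exp(-distance_mm / decay_length_mm)


for d in (0.0, 1.0, 2.0, 4.0, 8.0):
    print(f"{d:4.1f} mm -> {relative_intensity(d):.3f}")
```

Under this assumed model, a finger hovering a few millimetres away produces only a small fraction of the contact signal, which is why proximity works as a stand-in for touch.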

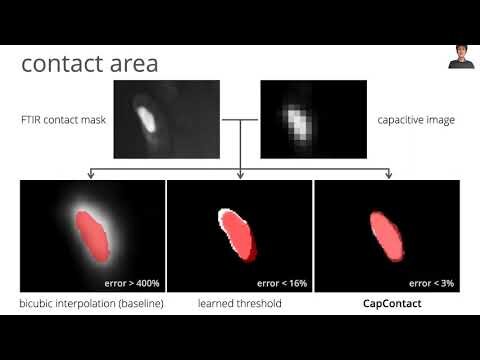

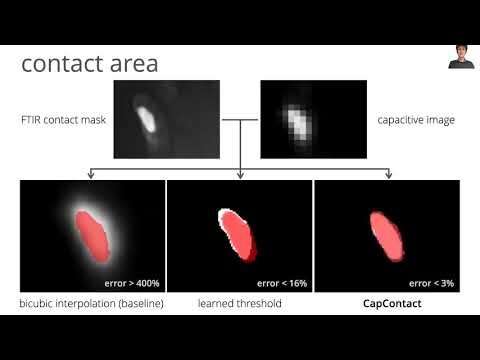

Capacitive sensing was never designed to infer with pinpoint accuracy where a touch actually occurs on the screen, says Holz: “It only detects the proximity of our fingers.” The touch screens of today's devices therefore interpolate the location of a finger's input from coarse proximity measurements. In their project, the researchers aimed to address both shortcomings of these ubiquitous sensors: on the one hand, they had to increase the currently low resolution of the sensors and, on the other hand, they had to find out how to precisely infer the respective contact area between finger and display surface from the capacitive measurements.
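The article does not say which interpolation today's devices use. A common approach, assumed here purely for illustration, is the intensity-weighted centroid: each sensor cell's position is weighted by its measured value, which yields a location between cells. The frame values and the `weighted_centroid` helper are hypothetical.

```python
def weighted_centroid(frame, threshold=0.0):
    """Return the (row, col) centroid of cells above threshold, weighted by intensity.

    Coordinates are in sensor-cell units; returns None if no cell exceeds the threshold.
    """
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0:
        return None
    return row_sum / total, col_sum / total


# Made-up coarse capacitive frame with one finger near cells (1, 2) and (2, 2).
frame = [
    [0, 0, 0, 0],
    [0, 2, 6, 0],
    [0, 1, 3, 0],
    [0, 0, 0, 0],
]
print(weighted_centroid(frame))  # (1.333..., 1.75): between sensor cells
```

Such a centroid only estimates one point from proximity values; it says nothing about the shape of the actual contact area, which is the gap CapContact targets.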

Consequently, CapContact, the novel method Streli and Holz developed for this purpose, combines two approaches. On the one hand, they use the touch screens as image sensors. According to Holz, a touch screen is essentially a very low-resolution depth camera that can see about eight millimeters far. A depth camera does not capture color images, but records an image of how close objects are. On the other hand, CapContact exploits this insight to accurately detect the contact areas between fingers and surfaces through a novel deep learning algorithm that the researchers developed.

“First, ‘CapContact’ estimates the actual contact areas between fingers and touchscreens upon touch,” says Holz. “Second, it generates these contact areas at eight times the resolution of current touch sensors, enabling our touch devices to detect touch much more precisely.”
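In grid terms, eight times the resolution turns a 32×15 sensor frame into a 256×120 contact map. The sketch below shows only that shape change, using the simplest non-learned baseline: nearest-neighbour upsampling followed by a threshold. It is not CapContact's deep learning model; the helper names and the 0.5 threshold are hypothetical.

```python
SCALE = 8  # resolution factor quoted in the article


def upsample_nearest(frame, factor=SCALE):
    """Repeat every sensor cell as a factor x factor block (no learning involved)."""
    return [
        [value for value in row for _ in range(factor)]
        for row in frame
        for _ in range(factor)
    ]


def to_contact_mask(frame, threshold):
    """Hypothetical baseline: mark a cell as in contact if its intensity exceeds threshold."""
    return [[1 if value > threshold else 0 for value in row] for row in frame]


# Blank frame with the 32x15 sensor size quoted earlier, plus one active cell.
coarse = [[0.0] * 15 for _ in range(32)]
coarse[10][7] = 1.0

fine = to_contact_mask(upsample_nearest(coarse), threshold=0.5)
print(len(fine), len(fine[0]))  # 256 120
```

The baseline can only produce blocky 8×8 squares; a learned model trained on real contact maps is what allows the finer grid to carry actual contact shapes.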

To train the AI, the researchers built a custom apparatus that records both the capacitive intensities, i.e., the kind of measurements our phones and tablets record, and the true contact maps through an optical high-resolution pressure sensor. By capturing a multitude of touches from several test participants, the researchers recorded a training dataset, from which CapContact learned to predict super-resolution contact areas from the coarse, low-resolution sensor data of today's touch devices.

Low touch screen resolution as a source of error

“In our paper, we show that from the contact area between your finger and a smartphone's screen as estimated by CapContact, we can derive touch-input locations with higher accuracy than current devices do,” adds Paul Streli. The researchers show that one-third of errors on current devices are due to the low-resolution input sensing. CapContact can remove these errors through the researchers' novel deep learning approach.

The researchers also demonstrate that CapContact reliably distinguishes the contact surfaces even when fingers touch the screen very close together. This is the case, for example, with the pinch gesture, when you move your thumb and index finger across a screen to enlarge text or images. Today's devices can hardly distinguish adjacent touches that are close together.
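Why resolution matters for adjacent touches can be illustrated by counting separate contacts as 4-connected regions in a binary contact mask, a standard connected-component labelling and not CapContact's own method. Both masks below are hypothetical: at coarse resolution the thumb and index finger merge into one region, while a finer mask preserves the gap between them.

```python
from collections import deque


def count_contacts(mask):
    """Count 4-connected regions of 1s in a binary contact mask."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1  # found a new, unvisited contact region
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:  # breadth-first flood fill of this region
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Hypothetical pinch: two fingers blur together at low resolution...
coarse = [[0, 1, 1, 0],
          [0, 1, 1, 0]]
# ...but a finer mask keeps the gap between them.
fine = [[0, 1, 1, 0, 0, 0, 1, 1, 0],
        [0, 1, 1, 0, 0, 0, 1, 1, 0]]
print(count_contacts(coarse), count_contacts(fine))  # 1 2
```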

The findings of their project now call the current industry standard for touchscreens into question. In another experiment, the researchers used a sensor of even lower resolution than those installed in our mobile phones today. Nevertheless, CapContact detected the touches better and derived the input locations with higher accuracy than current devices do at today's regular resolution. This indicates that the researchers' AI solution could pave the way for new touch-sensing technology that lets future mobile phones and tablets operate more reliably and precisely, yet with a reduced footprint and lower sensor-manufacturing complexity.

To allow others to build on their results, the researchers are releasing their trained deep learning model, code, and dataset on their project page.


Paul Streli et al. CapContact: Reconstructing High-Resolution Contact Areas on Capacitive Sensors, Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (2021). DOI: 10.1145/3411764.3445621

Citation:

Improving touch screens with AI (2021, May 14)

retrieved 15 May 2021

from https://techxplore.com/news/2021-05-screens-ai.html
